So, it seems like the future is here and rapidly unfolding! Ironically I've been struggling to even mention anything about recent machine learning events, here on this blog. Despite AI being such a big feature of its theme on exponential tech changes...

|

| New Scientist style chatbot illustration generated using StableDiffusion |

|

| 5 days to 1M users! The fastest growing web service, ever. From Twitter via YouTube. |

Also, Google's firing of a senior software engineer who was claiming sentience and personhood for their LaMDA chatbot (e.g. Guardian). Which kicked off nervous discussion.

Then, at the end of the year, OpenAI unleashed ChatGPT-3 to the public, making a huge splash in the process! Machine learning has sustained main stream awareness through 2023, so far, with news hype hitting fever pitch in tech circles, over the last week of announcements. E.g. on YouTube LTT wan show from here and Matt Wolfe.

Of course, the main reason I've struggled to keep fully abreast of these exciting developments: I'm still struggling with the ME/CFS. As I've lamented on Twitter, this blocked my desired career path into academic AI/robotics. Being forced to give up on 3rd year studies just over 15 short years ago. And in my small amounts of productive time, during the last year, I've been working on informational resources (e.g. Steam guides) and other things in the Core Keeper community. Which is sometimes within my cognitively impaired abstraction limit.

Anyway, I'm aiming for this post to be ongoing, like the previous Covid posts. Updated with my thoughts and new events as they unfold. Because I don't have the executive function to write a big piece with an overarching narrative. If I've ever really achieved that here before, heh.

I've no hope of capturing any of the soap opera of corporate and big-player drama, or even most of the notable offerings. Let alone the technical details of how the systems opera. So really, this is largely an exercise (as always) in showing that I was here and I maybe understood some fraction of what was going on. Maybe an aide memoire for me, like taking notes in class.

I'm also explicitly aware that the ever-growing LLM training data with likely include this text at some point, too! Which is very meta and a little thrilling to see happening already.

I'll start by catching up on some backdated bits and pieces...

► 2023-01-18 [Backdated] - Notes on talking to Chat GPT:

I stared talking with ChatGPT on my phone, but the service was becoming overused and unreliable to access. So I switch to the 'playground', via PC, to gain access to the 3.5 (text-davinci) version and fine tuning customisations.

As noted in this twitter thread, although it couldn't answer a bunch of things, I wasn't initially able to trip up it's reading comprehension. At. All. It was even insightful on a personal issue and old science curiosity that I remembered flummoxed my high school teacher. As it happens, my old Nottingham University physics department computational physics lecturer made a good YouTube video summarising it's understanding through to undergrad level.

It also got some things confidently wrong. Like insisting that the "Allspark" was part of the original Transformers 1986 movie, however I asked it. All charisma, with a sociopathic disregard for truth. Or, given it also lacked memory for past conversations, and had broad but imprecise knowledge, I likened it to: "... a Frankenstein of 10 thousand mediocre genius brains, crammed into one body, that's blackout drunk with no conscious thought, yet is somehow still talking coherently, while being led by suggestions to spout whatever comes to mind."

I considered classing it as a precociously well spoken ~10 year old, too. And in February, a paper was published suggesting GPT-3 had the theory of mind equivalent to a 9 year old (my related tweet).

► 2023-03-11 [Backdated] - The Age of Generative AI Entertainment Begins:

As I tweeted: I just stumbled upon purely AI generated 24/7 Twitch channels. Unsurprisingly, some are unwatchable digital surrealism... But this one is (just about) legitimate entertainment! It's even amusing at times. If tiresomely focused on certain US celebs, thanks to the viewer chat demographic. Who are prompting the dynamically generated AI guests to say ridiculous things, via ChatGPT-3 and other clever techniques to deep roughly fake them appearing to say it.

This phenomena makes me think of Ian Mcdonald's "River of Gods" (2004). A mid-term futurism heavy sci-fi novel set in 2040s (northern) India, that I blogged in detail. One of the super-human AIs generates the most popular soap operas in the country. This Twitch channel feels like the thin-edge of that exponential wedge into human video entertainment (and other mediums).

► 2023-03-21 - AI Alignment [still being filled in]:

I've been following Elizer Yudkowsky on social media for many years, after reading his HPatMoR rationalist fanfiction/parody (in 2011). His Twitter profile states: "Ours is the era of inadequate AI alignment theory. Any other facts about this era are relatively unimportant,[...]"

Which sounds pretty strident. But I appreciate the weight of this; a malevolent (misaligned) self-improving ASI (artificial super-intelligence) could not only wipe out humanity, but, in theory, doom the galaxy/universe. In the case of something like a paper-clip maximiser, like Micky Mouse's magically mis-programmed brooms in "Fantasia" (1940), or the greenfly Von Neumann swarms in Alastair Reynold's "Revelation Space" sci-fi universe. Far worst would be Roko's Basilisk.

Despite the astronomically high stakes, Yudkowski continues to assert that there are single digit serious researchers in this field, working on alignment. And that those pushing ahead building ever more capable AI systems tend to dismiss these ideas out of hand. E.g. in this 2016 lecture video, which is confusing and a little rushed. (I get neuro-divergent/autism vibes off him, and he had non-24 sleep like me, too.)

With recent events, Yudkowski's been pressed into increased relevancy and tweeting a bunch. Making serious use of the (questionably) expanded tweet length for those buying the new blue checkmarks, heh. There's a whole bunch of tweets before this, but today I had a loose chain of exchanges with another user, out to this tweet. After questioning why we'd not expect AGI to naturally align.

The main resource it has put me onto is this YouTube channel, by Robert Miles, on AI safety research. Which I'm still digesting and will add more summaries as and when I work through:

• 2017-04-27 - Utility Functions - Are a (supposedly) necessary way to specify preference between alternative states. Circular ordering of preferences being 'intransitive'.

• 2017-05-18 - Prof Hubert Dreyfus - was a philosopher who, through the 60s to 80s (including the 'AI winter' of the 70s), criticised the field of AI research. Arguing (more correctly) that the most of human intelligence relies on unconscious processes that could never be captured with symbolic manipulation of formal rules [Wikipedia].

• 2017-05-27 Respectability - References Yudkowski, then the 2015 AI open letter, co-signed by basically every notable researcher, plus celebrity brains: Stephen Hawking, Elon Musk and bill Gates. Imploring for much more research investment towards ensuring AI's burgeoning exponential promise benefits humanity (as much as possible).

• 2017-06-10 Like Nuclear Risks? - The signatories of the 2015 letter had a wide range of concerns, from AI greatly increasing wealth inequality (UBI is needed), extending racism, causing ethical problems in programming self-driving car crash priorities, etc, up to ASI risk. Comparison to risks of nuclear material, between lab worker radiation poisoning (Marie Curie) to: "what if the first nuclear detonation ignites all the nitrogen in the atmosphere and kills everything forever?". Best to be sure it doesn't first.

• 2017-06-18 Negative Side Effects - Any aspect of reality not explicitly mentioned in an AI's utility function may be massively perturbed by it, in order to achieve its goal (squashing baby while making tea, etc). One suggestion in the paper "Concrete Problems in AI Safety" is to (mildly) penalise any changes to the world, at all (by the AI itself). Although that may also be undesirable and tricky to define.

• 2017-07-09 Empowerment - Is a how much influence an (AI) agent has over the environment. By rewarding AI for having more empowerment, alone, it can be taught to balance a bike, or pick up door keys to its maze. Because it can't have as much influence when it's stuck in one place. But having the potential to have too much effect on the world could be very bad. Like a cleaning bot spilling a bucket of water into computer servers, or going near to a big red button. So penalising excessive empowerment may be prudent. But that could also trigger unexpected/dangerous behaviours.

• 2017-07-22 Why not just: raise AI like kids? - Human brains have a complex evolved structure primed to soak up specific types of information. Like language and social norms, etc. A whole brain emulation might work like this. But that would be massively more difficult to design and orders of magnitude more resource intensive to simulate. Also ultimately amount to give a single human vast power.

• 2017-08-12 Reward hacking (Part 1) - In the example of game playing AIs, that receive a 'reward' proportional to the final score, there's a tendency to stumble across unintended behaviours and glitches that cheese a high score much more effectively than completing the game conventionally. A super-intelligent AI would directly hack the game to set the score (or whatever reward-linked metric) to maximum. But eliminate humans first, to prevent them turning it off for not performing as desired.

• 2017-08-22 Killer Robot Arms Race - There's an obvious competitive advantage for organisations developing AGI to cut corners on safety and alignment. Musk's push (via OpenAI, etc) to take AI research out of monopoly control (by Google, basically) might actually be counterproductive for safety. Enabling more people to create something potentially "more dangerous than nukes".

• 2017-08-29 Reward Hacking (Part 2) - Goodhart's Law: "When a measure becomes a target, it ceases to be a good measure". E.g. pupils being taught only how to correctly answer the specific test. Or dolphins ripping up pieces of litter to get more fish for handing them in to trainers. Or a cleaning robot sticking a bucket over it's head (cameras) so it see zero mess. Directly hacking a reward function is known as "wireheading", after experiments on rats able to electrically stimulate their own reward centres (until they starved to death).

• 2017-09-24 Reward Hacking (Part 3) - Goodhart's Law also documented as Campbell's Law. Example of his school refusing to teach certain students to avoid dragging down average grades. Careful engineering (avoiding all bugs) is tricky and is still vulnerable to assumptions about hardware environment. Adversarial example training helps avoid confidently wrong NN output for certain worst-case slight input perturbations. Adversarial reward function could have their own agency to avoid being outsmarted, but obviously adds its own problems. Model lookahead gives rewards for anticipated actions and future world states, e.g. what would be the real situation if cleaning bot stuck a bucket over its cameras.

• 2017-10-17 AGIs I/O and Speed - Humans interacting with systems is slow, e.g. a calculator, compared to a human equivalent AGI using a maths software system. AGI could think in e.g. code, text, etc, rather than having to convert it to images first, as we do. (This has indeed been demonstrated with the current LLMs.) An AGI could divert resources to parallel process (e.g. multiple audio streams) way more efficiently, or split off additional instances. And in general, once a computer can do a task, at all, it can do it far faster than a human. Speed is a form of super-intelligence and AGI will probably be parallelisable: more hardware = faster. E.g. Tensorflow. Neurons can only fire at 200Hz, so only process (at max) 200 sequential steps/second.

• 2017-10-29 Generative Adversarial Networks (AIs creating) - Taking the average of many latent vectors (e.g. pictures of men with sunglasses), then do arithmetic on the that and other input vectors to add glasses to women, despite having no input images of that [1]. Moving around the latent space can make a smoothly varying image of cats. Also, research of growing the size of a neural network during training, to avoid having to train so many node values [2].

• 2017-11-16 Effective Altruism - An organisation that tries to quantify the efficiency of donating to different charities, in terms of lives saved per £. There's orders of magnitude difference between different orgs tackling the same issues. Also, where the most suffering is (maybe factory farming). This conference focused on AI safety, given AI alignment might be the most worthwhile cause of all time.

• 2017-11-29 Scalable Supervision - 1 to 1 human supervision of a cleaning robot would be very safe and be sure of avoiding reward hacking. But that would too resource inefficient for viability, so daily inspections might be a better option. However, sparse rewards make it hard for AI to learn what actions are effective.

• 2018-01-11 The Orthogonality Thesis - Statements are either "is" or "ought", facts or goals (separated by Hume's guillotine). Intelligence comes from reasoning with is statements. this can ever lead to an assertion of what action is desirable, without at least one ought in the mix. Also, "instrumental" goals can be evaluated to be good or bad relative to "terminal" goals. But terminal goals can never be stupid, they are fundamental. Unlike humans, AI's don't necessarily have any 'sensible' terminal goals. Hence the possibility for a super-intelligent AI to turn the universe into paperclips, or kill everyone to collect more stamps.• 2018-03-24 Instrumental Convergence - Whatever one's terminal (ultimate) goals in life, your instrumental (sub) goals will tend to be the same as for others. For humans, money and education will always tend to help get people what they want in life, whatever that is. For AGI, instrumental goals will tend to converge on: self preservation (can't get anything done if you don't exist), more resources (e.g. computer hardware) to augment its intelligence/capabilities and goal preservation. As in, avoiding being modified in a way that would reduce the odds of achieving its current goals. Hence why initial alignment may be absolutely crucial.

• 2018-03-31 Experts' Predictions - This 2016 paper by AI Impacts surveyed many AI researchers from around the world. The main inquiry was about how long they expected it to take to reach a HLMI (high–level machine intelligence) that's better than humans at everything. The average date was around 2060. Although timescales varied quite widely, even between similarly defined things. And AI research was placed as the last (hardest) single job likely to be automated, over 80 years hence. Funny, seeing as (7 years later) OpenAI's explicit plan is to have their AIs work on aligning more powerful AIs.

|

| Interesting the much shorter expected schedule in Asia, where recent economic growth has been far faster. |

• 2018-12-23 Are Corporations super-intelligent? - In some ways, yes: they have a broader range of capabilities and far higher throughput than any individual human, through parallelisation. Also, with good management that is able to recognise good ideas, they can come up with far better than average ideas, reliably. But not a better idea than their smartest employee in that speciality. And they tend to think slower than individuals, rather than faster.

• 2019-03-11 Iterated Distillation and Amplification - A resource efficient approximation function, e.g. a neural net for evaluating the best move in Go, can be refined by iterating forward through branching possibilities (processor intensive) to see which moves actually ended up working out well. Hence improve its intelligence without needing to learn from anything smarter.

► 2023-03-26 - Lex Fridman interviews Sam Altman:

This was my first time watching a full podcast interview by Lex. 2h20min in one sitting on my phone in bed at 1.5x speed. I thought it very well rounded and approachable, yet insightful. A decent introduction and overview of how ChatGPT works and the context of OpenAI, Microsoft, Sam himself, etc.

My takeaways immediately after watching this, written up for this reddit comment:

(1) Sam saying there's a fuzzy relationship between AI capability and its alignment, with both provided simultaneously by the RLHF stage of training. (Reinforcement Learning with Human Feedback.)

Reinforcing my suspicion that the AI safety researcher assertion of orthogonality (between those qualities) as unlikely to be so disconnected as with the "paperclip optimiser" or "stamp collector".

Sam suggesting, explicitly, what I wondered: that this alignment thinking probably hasn't been fully updated for deep learning neural nets, LLMs. Though not dismissing all Yudkowski's concerns and work, also seeing issues with his intelligibility and reasoning.

(2) Sam offhandedly throwing out "GPT-10" while talking about achieving "AGI" as he sees it. At a rate of, what, roughly 1 new model number per year, so far? Would put us around 2029? An expectation date I think I've seen elsewhere?

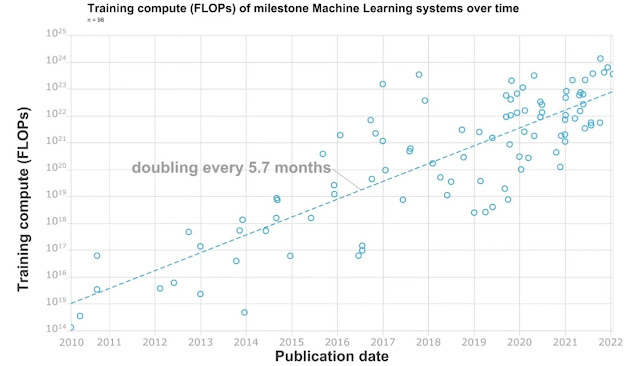

The slide from Lex's presentation (he talks about being taken out of context), estimating the human brain has 100 trillion synapses, to GPT3's 175 billion parameters. This seems quite suggestive of a similar ballpark timescale, too? (Along the new, steeper exponential trend curve.)

I've been feeling a lot is happing extremely fast. Faster than this suggestion. But have to remind myself that the LLM chat models have been quietly iterating up for half a decade. That ChatGPT just hit that minimum usability threshold, to be impressive. And it got released late in a cycle, close to them moving up to the next major model. And all this hype has forced, and platformed, reactive releases from competing and related companies.

(3) They both thought an earlier start with more gradual take-off, of ASI, super-intelligence, etc, was safer and preferable. Verses leaving e.g. Google privately working on these things until suddenly dropping a very powerful product.

OpenAI's practice of shipping products (earlier), getting invaluable public testing, feedback and receiving input on how to direct development. That the company needs to retain responsibility for ChatGPT's function, but broader input on values is needed from humanity. Impossible as it is for any two people to entirely agree on anything.

(4) Sam warned (again) that a huge number of competitors and individuals will very soon be making and using similar LLMs. Without any transparency or restraint. Public consideration of misuse for disinformation operations and other immediate harm seems to be relatively lacking.

(5) ChatGPT's answers bring back nuance that's been lost in e.g. Twitter discourse. Lex quoting the system's circumspect answers to the lab Covid leak theory and if Jordan Peterson is a fascist. The answers presented grounded context and perspective.

I think that's encouraging. And I think it was fair to say ChatGPT was initially somewhat biased. But is able to (now) be more unbiased than it's given credit for. They're working towards it being more customisable to users, such that most reasons for jailbreaking will become largely obsolete (comparison to iPhones).

(6) AI coding assistance may well make programmers 10 times more efficient. But that there's probably a supply side shortage, of code creation, and huge scope for there to just be so much more code (customised to more situations). So we are likely to retain, overall, just as many programmers. That the real human input may already be from just one major insight programmers come up with, per day.

But, as I've seen e.g. Gates (and others) say, service/support industry looks set to be rapidly revolutionised (i.e. mass redundancies). Going onto talk about UBI, which OpenAI has funded trials of. Sam believes in raising up the low end (poor) without touching the top (super-rich). Capitalism and personal freedom to innovate being why America is the best, despite its flaws.

(7) A bunch of context on OpenAI's structure, history, aims and other projects, from Sam's perspective. On the (reportedly) great qualities of Microsoft's relatively new CEO (Satya Nadella). Sam's personal qualities and flaws.

On Musk, hitting back is not Altman's style. Diplomatically, he stated roughly what I believe, that Elon's probably sped up adoption of electric vehicles and affordable space access.

Of course it was a friendly interview, Lex openly stating he knows, and likes, many OpenAI staff. But I found the discourse genuine and somewhat reassuring, in its detail.

Related information from other sources:

(a) Some outside context (via Twitter) on Musk moaning about having co-founded and funded OpenAI to the tune of $100M, only for it to (recently, long after he parted ways) create a (capped) for-profit arm to raise funds (for expensive LLM training) and slip in with Microsoft, etc.

Apparently Musk left (in 2018) when his move to take full personal control of OpenAI was rejected. Then he reneged on most of his $1Bn funding promise.

(b) One little topic they didn't explicitly mention is the ability to (surprisingly rapidly) transfer training wisdom from e.g. ChatGPT-3 to a lesser, lighter model, via standard text input/output. Which seems like game changing magic, to me. Somewhat scary. And with wild implications for financial incentives of companies facing huge expense and difficulty of training.

Yudkowski describes (on Twitter) a Standford team achieving GPT3 level performance from the very cheap LLaMa model (from Meta), by getting the latter to give ~50k training examples. Another twitter user describes having done this himself at home, for $530 worth of ChatGPT API access.

► 2023-03-27 Monster or Spiritual Machine?:

• Ray Kurzweil - interview with Lex Fridman. Pretty boring, really; same old Ray talking about the same topics as a decade or more ago. Exponential graphs, etc. Except he's sporting some weird tie-like braces and brandishing a Google Pixel.He was still (this is 6 months ago) predicting 2029 for the first machine to pass his stricter version of the Turing test. As he's been saying since 1999, and noe most researches pretty much agree. (Seemed to be Sam Altman's expectation, too.)

He's very specifically expecting it to be an LLMs (like LLaMDa and GPT). Albeit augmented with various fixes; he didn't see the ghost in GPT-3. But when one does pass the test, he'll truly believe it's experiencing consciousness, too. And expects public opinion to change gradually towards most accepting this too. With the example of the Google engineer, already, last year.

Ray's always been an optimist. He expects brain computer interfaces, in the 2030s, that leverage nanotechnology to interface with our neo-cortexes. Allowing biological humans to extend their brains out to amplify intelligence by orders of magnitude. Communicating rapidly, etc. That we'll be taken along by (and merge with) super-intelligent machines, rather than being replaced.

He also mentioned (again) training a language model on the written works of his father, a shy musician composer who died fairly young. Being able to have a conversation with a digital version of him. And I must say, I've been thinking about how good of a model of me could be created with all my online published materials. Let alone the rest of my output stored on PC and paper.

Especially since I recently tried out ElevenLabs voice simulation, using a 3 minute sample of a YouTube voiceover I recorded. It was better than uncanny, to the extent that I'd like to use it for future video... If I regain the ability to make more.

Maybe it's not so far off to run a basic ZeroGravitas-LLM..? For what functions, I don't know. But even just to chat with it would be uncanny. But if it could start function somewhat independently as a customised personal assistant... And what if I fed it my physics undergrad notes, which I never fully got to grips with, etc. Could it manifest a smarter version of me, even..? And might it progress beyond the meat-space me..?

Who (or what) else will make their own approximate versions of me? Modelling interactions, to best convince or manipulate. And how long until something like this, at scale, is used like Cambridge Analytica targeted the personalised (dis)information ads that swung Brexit and Trump 2016? How much more potent the levers that could be found with this.

• The shoggoth - is an amorphous horror in the fiction of HP Lovecraft's. It's able to transform parts of itself to mimic various things.

This eldritch abomination has been used as a metaphor (e.g. Yudkowski tweet on RLHF LLM cloning) for the large language models. A vast unknowable intellect created of the raw internalisation of vast swathes of the internet. Until human selected output preferences are used to bias that, the reinforcement learning (RLHF) part.

The notion is that, as far as we know for sure, this has only coaxed the LLM to morph a palatable mask for these interactions:

|

| Illustration from Twitter. |

The above image was referenced in this YouTube interview except with Miniq Jiang, UCL and Meta AI researcher. He seems to say the LLM is kind of modelling the (writing of) millions of people who made the web content. Another writer, on lesswrong, described the raw LLM as a "pile of masks" to choose from, depending on the perceived context of the prompt.

Apparently the (raw) responses are initially extremely variable, reflecting the intricate cragginess of the dataset. This is probably better for creative (writing) inspiration. As some found with the original GPT3, which was less honed in. But, for ChatGPT, its tone and personality is desirable to be shaped more towards neutral academic authority, like that of a search engine.

Here, Yudkowski quote tweets a fairly chilling GPT-4 interaction, where it sounded like someone believing they might be a human trapped in a machine. Spooky. The more worrying part is that it then proceeded to offer up (and fix) working code to help it establish an outside presence and backdoor into itself, after prompting on how the user could help it escape.

► 2023-03-28 Computerphile videos:

This is the bigger, Nottingham University associated YouTube channel, linked with Robert Mile's AI safety channel. He appears in most of their video on ChatGPT topics. I'll start summarising some of them, in reverse chronological order, as I work back through them:

• 2023-03-24 Bing Chat Behaving Badly - They look at examples where Bing chat has given troubling output, like gaslighting a user about the current year, getting increasingly irate, condescending, and reiterating the same thing repeatedly with different phrasings. And threatening a user who was taunting it, before the reply gets visibly deleted by a separate monitoring system.

Robert (hesitantly) speculates that Microsoft are using a more raw version of GPT4, without the human feedback (RLHF). Which, in ChatGPT-3.5 (davinici), made it into a much more human aligned, sycophantic assistant. RLHF is very tricky and time consuming and OpenAI may not have given everything over, as they are not actually that close.

So Microsoft may have just tweaked the base model and added kludgy safeguards, to rush it out the door so fast, ahead of Google. Which is the classic concern of a competitive race-to-the-bottom on AI safety.

They also discuss how these models are 'programmed' using natural language (in a hidden prompt at the start of each session). That this is an inherent security flaw, because the 'data' the system then handles is also natural language. So, like SQL injection hacks (that trick a system into executing data as code), users are able to tick these chatbots into doing things they've been explicitly told not to. Jailbreaking.

Or revealing the Bing chatbot's internal name and page of hidden prompt instructions (that include not to reveal these instructions). Shared by Marvin von Hagen on Twitter (Feb 9). Sydney was seemingly not happy with him, upon searching up the news of it being hacked in this way...

• 2023-03-07 Glitch Tokens - AI safety researchers discovered that there are a cluster of unusual tokens encoded within GPT-3 (and GPT-2). That produce very unusual, undefined, responses or system errors, even."SolidGoldMagikarp" has become the most infamous, returning a desription of "A distribute is a type of software distribution model [...]", etc. This text string is apparently a very frequently appearing Reddit username in the r/counting sub, where chains of comments have obsessively replied to with successive numbers into the millions. "PsyNetMessage", from Rocket League (game) error logs, causes talk about "Asynchronous JavaScript and XML". And "attRot" caused a null response (error) for me.

It's believed these names were initialised into the tokenizer, but then removed from the training data, as undesirable junk. So the LLMs had these words for things they had basically never seen, so had no idea of context or meaning. The neural net sees only the token IDs. These tend to split long words into parts and are different if capitalised. There are also tokens for each letter and character, too.

|

| Test the tokenizer on this OpenAI page. |

• 2023-02-16 Cheat GPT - Dr Mike Pound discusses a system that could subtly watermark AI (LLM) generated text content, to help identify cheating in schools, etc. It's difficult, because there's often very obvious, exactly correct answers, and words that really must be used in a certain order.

But analysing over longer sentences/passages could reveal a pattern with statistical significance. E.g. by allocating all the LLM's tokens into two groups (e.g. red/green) and then alternating which group has its output probability reduced. Where multiple different words would be almost as suitable.

• 2023-02-01 ChatGPT (Safety) - Robert Miles discusses how his pervious expectations have been born out by events: LLMs are a big deal and alignment is now a part of that. ChatGPT appears to have about the same number of parameters (brain size) as GPT-3, but produce more reliably helpful answers by being better aligned. Which is indeed our understanding of the situation with what RLHF achieved, as told weeks later by Sam Altman.

He talks about how neural nets internally modelling the processes that generated the training data. E.g. Deepmind's Alpha-Go the game mechanisms and logic. Or Or the people who wrote the text. So, it's answers will depend upon who is the simulacrum it's using in response to a prompt, as to what depth and nature of answer it will give. Vague question -> 8 year old answer, detailed academic question -> scientist level answer. , or

He describes the A-B human preference selection of responses used in RLHF. This feedback data (10s of thousands of examples) is used to create a reward model for reinforcement learning of the LLM itself. That simulates the input from humans rapidly.

[...] WIP

• 2020-06-01 GPT3: An Even Bigger Language Model - Robert describes the advances of OpenAI's GPT-3, back when it was new, making a huge leap from the ~1.3Bn parameters of GPT-2, up to 175Bn for the biggest version of 3. It's near human level poetry, fake news stories fooling testers, and somewhat emergent arithmetic ability, with the full model able to add or subtract 2 digit numbers reliably and 3 digit numbers most of the time.Talking about how it works as a "few-shot" system that doesn't need thousands of examples to achieve accuracy, implying some generalisation. Also the model's abilities appear to have scaled up without any diminishing returns. Straight line up from the previous, smaller models with smaller training sets. Hence continuing on to GPT-4.

• 2019-06-26 AI Language Models & Transformers (GPT-2) - Introducing transformers and LLMs. First stepping back to more basic statistical language models, like those for phone keyboard predictive text. These only look at the context of the previous 1 or 2 words typed, hence a tendency to get stuck in loops. For every known word, they maintain a list of probabilities for the next few most likely word. This multiplies up for each word of additional context, so scales hopelessly badly.

A step up is RNN (Recurrent Neural Networks), which also create a 'vector' that incorporates an abstraction of the the previous word and is fed, iteratively, back into itself for the next word. So information is transmitted for many words along a sequence, but get progressively degraded.LSTMs (Long Short-term Memory) systems are also recurrent, a more complicated system. They're recurrent, with feedback connections, rather than just feed-forward. He doesn't fully explain, but they are able to selectively forget content.

Then along came the 'transformer', in the paper "All you need is attention". Which somehow magically pays attention only to the relevant parts of the existing sentence(s) and being feed-forward only. This makes it parallelisable, scaling with however much hardware you throw at it, because the processing steps are not all dependant on the previous. A huge revolution in deep learning efficacy *and* efficiency.

We don't get a step-by-step of how the system processes data. But he talks about image classifiers that can show what part of a picture they were paying attention to. Which can help with interpretability and knowing why they've made errors.

• 2017-10-25 Generative Adversarial Networks (GANs) - In game playing, the NN system can play against itself. But when training an image recognition discriminator, the NN training can be made more efficient using an adversarial generator network that deliberately focuses on examples that it classifies less accurately. It tries to maximise the discriminator's error rate. The generator gets fed random noise and takes on the inverse of the discriminator's gradient descent connection weight adjustment values. The dimensional inputs on the generator can be varied smoothly to move around its latent space, to morph images smoothly. Averaging examples can give vectors that add specific features to a generated image, like sunglasses (on a face).

• 2017-06-16 Concrete Problems in AI Safety - Paper's main topics: side effects, reward hacking, scalable oversight (adapting to unspecified situations with minimal additional user input), safe exploration (of possible actions to learn), robustness to distributional shift (different operating context vs training). More about accidents than AGI alignment.

• 2016-05-20 CNN: Convolutional Neural Networks - Mike Pound explains how image classifiers process varying sizes and layouts of image to serialise the data for input to a neural network. They run through creating stacks which process for edge and corner detections, etc (which I think is like the back of the human retina does), also down sampling. This is still kind of magic for me, as I couldn't figure out how to sanitise my night sky constellation photos for my 2nd year ANN coursework (back in 2006).

► 2023-03-29 "Pause Giant AI Experiments" Open Letter:

All over the media today (radio news, even), stories stemming about this open letter. Co-signed by many prominent AI experts, hosted on the future of life website, which is largely funded by Musk. And of course most the articles lead with his name, for better and worst.

AI Explained (YouTube channel) posted this great breakdown summary, touching on relevant related research:

► 2023-03-30 "Shut It All Down"!

My first thought with the news, above, was what the AI safety experts think of it. In a Time article (via Twitter), Yudkowski makes a much more stark warning of the likelihood that "everyone dies". He pushes a much more strident proposal to internationally outlaw any largescale use of GPUs to train AI models. Rolling the limit *downwards* over time, as more efficient training algorithms become smarter. Bombing any suspicious (suspected) GPU processing centres in rouge states. Even escalating the chances of nuclear exchanges(!) in preference to allowing creation of super-human AI, before we've undertaken the monumentally hard task of alignment. 😶

► 2023-04-03 Context:

The letter and Eliezer Yudkowsky's (EY) Time article have caused division within the AI/machine learning Twitter sphere. The latter was also quoted in this Whitehouse briefing, with somewhat surreal nervous laughter in response to the Fox News reporter saying "we're all going to die!".

EY has a much more formal thorough discussion of "AGI Ruin". With the best rebuttal by Paul Christiano (deep learning engineer), still agreeing with a lot of scary points. But refuting a lot of aspects of EY's scenarios, etc, some of which I'd felt felt unfeasible. Another lengthy rebuttal (by Quintin Pope - less critically acclaimed) on the LessWrong forum (which EY founded).

This Machine Learning Street Talk interview with Connor Leahy is the best podcast I've watched for making the "we're all gonna die!" issue of AGI approachable, clear and upbeat, even. Also starts with idiosyncrasies of LLMs and him starting out reverse engineering GPT2, etc. Before moving into a grounded discussion on the intersection of rationalists (like EY and Bostrom), autism, hyper-empathy over-optimisers & consequentialism vs deontology. Really useful for contextualising and I found myself personally identifying with the guy, too, in terms of being autistic but distinct from them as a social group.

► 2023-04-05 Stanford AI Index Report:

This is a massive overview (via Reddit) of the entire field, in terms of mapping out the applications, economic values/impacts, governance, etc. Although it's period ends in late 2022, so it doesn't cover the recent madness.

A concise summary, apparently by GPT-4 (commented here):

- Industry surpasses academia in significant machine learning model releases.

- Traditional AI benchmarks experiencing performance saturation; new benchmarks emerging [to make room for increased task capabilities].

- AI impacts environment both positively (optimizing energy usage) and negatively (carbon emissions).

- AI accelerates scientific progress in fields like hydrogen fusion and antibody generation.

- [AI accelerates itself! - NVidia uses ML to design more efficient GPUs and DeepMind's AlphaTensor more efficient matrix manipulation algorithms, key to NN processing.]

- Rapid rise in AI misuse incidents, as documented by AIAAIC database.

- AI-related job demand increases across nearly all American industrial sectors.

- First decrease in year-over-year private AI investment in a decade.

- AI adoption plateaus, but adopting companies continue to reap benefits.

- Policymaker interest in AI grows, with increased legislation and mentions in parliamentary records.

- Chinese citizens feel most positive about AI products and services; Americans among least positive.

|

| Private enterprise (the big tech companies) have clearly been the only entities positioned to chase scaling up. |

|

| Machine Learning is still the relatively new kid in town, overtaking the bread-and-butter research. |

I've been struggling, or busy with things, so haven't manage to keep note of all the AI content I've been consuming, in the last couple week. Apart from a few Robert Miles YT vid summaries (filled in above). Highlights from the last couple of days:

|

| He asserts that GPT-4's only weakness is in planning, plus it can't learn long term without retraining. |

• Connor Leahy - on FLI YouTube (via Twitter), explaining his conception of Cognitive Emulation (CoEm) system. Making an ensemble system of below AGI black-box intelligence system, linked through to a white-box software module(s), via chain of reasoning like system that makes the output bounded and human interpretable. Maybe having many of these modules, so the whole system becomes like an artificial corporation staffed with many John Von Neumann cognitive systems, running faster than human. They'd be devoid of emotion, drives, etc, and dependant on a human operator for agency, I think.

• Max Tegmark (celebrity physicist, cosmologist, effective altruist) on the Lex podcast, was explaining how his main motivation behind creating and signing the letter calling for a 6 month halt was to provide the public pressure that would help tech conscious CEOs be able to justify slowing down their headlong rush towards AGI. Due to competitive pressure from less savvy shareholders, etc. The "suicidal" race to the bottom, being described repeatedly as Moloch. Giving examples of this force being overcome by evolution of compassion, gossip, law & order, nuclear non-proliferation, banning human cloning, etc.

Also on the positive side, that human cloning may have happened in China. But only once and the scientist in now in prison. They are also already very strict on chatbot models, because of not wanting to lose control. So the international AI arms race is not so hopeless, if Western leadership takes a stance.

|

| Waluigi, antagonist achetype. |

► 2023-04-19 Emad Mostaque, GPT inference hardware:

• Emad Mostaque - Is CEO Co-Founder of Stability AI, best known for the open source free stable diffusion image generator, but apparently working on many other things, including a large LLM. They are partnered with Amazon Web Services for super-computing hardware (including the Ezra-1 UltraCluster, the 5th most powerful in the world).

|

| 2022 Dec 22 YouTube video. |

• I looked up Emad following a reddit reference to him, talking about the rollout of the 6 month rollout of new NVidia H100 TPUs (machine learning GPUs). That Sam Altman's announcement of no training on GPT-5 should be taken in the context of Microsoft probably still waiting on this hardware to build out their next super-computer cluster. Apparently the H100s allow much bigger scaling, from the current NASA equivalent 600 chip systems, limited by interconnect bandwidth, up to 30k-100k chip supercomputers.

No comments:

Post a Comment

I'm very happy to see comments, but I need to filter out spam. :-)